Revisiting The AI Pitch

Introduction

Akash Network made an impressive run earlier this year: riding the Artificial Intelligence narrative, its token price climbed from the 0.50 level to 1.60 at its local peak.

As the hype around this topic gradually fades and the token price normalizes to its current level, it is a good time to revisit the fundamentals of Akash Network. As we head into the new year with some identifiable AI catalysts, Akash could make a comeback and remind the market what it was excited about.

What is Akash Network

In a nutshell, Akash Network is a layer 1 chain built with the Cosmos SDK. It started out as a decentralized computing network that matches the demand for cloud computing services, such as memory and storage, with the supply side of the market.

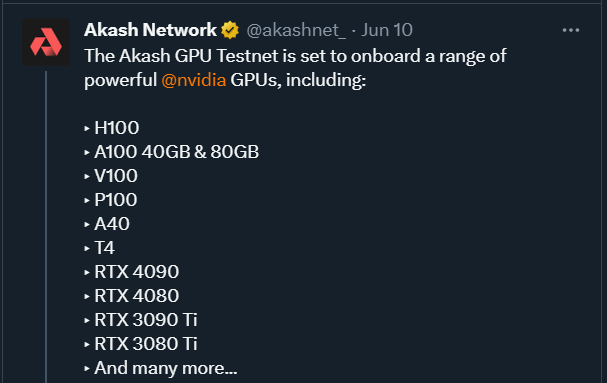

Earlier this year the Akash team launched AkashAI, a peer-to-peer marketplace for sharing computing power specifically for AI use cases by tapping into idle Nvidia GPUs. The launch kicked off the impressive rally as the market woke up to it.

The Market Opportunity

The Artificial Intelligence market is a billion dollar opportunity, and Graphics Processing Units (GPUs) lie at the center of it. GPUs power the entire AI market through their ability to process computational tasks efficiently and in parallel, and demand for them grows naturally as more AI use cases emerge.

As for upstream GPU providers such as Akash Network, the opportunity set is massive because high density GPUs are scarce under the current supply chain constraints. To address this market pain point, Akash Network makes idle Nvidia GPUs available to average users targeting AI specific use cases.

The AI market consists of sub-categories: the training of foundation models, the fine-tuning of trained models, and inferencing, in which the model answers queries from users. As of now the training market still accounts for more than 90% of the Generative AI market, as hyperscalers such as Google, Microsoft and Meta compete to develop their own foundation models.

The revenue opportunity for upstream GPU providers in the training market can be quantified as the number of high density GPUs each hyperscaler needs to train its foundation model, multiplied by the price per GPU.

The revenue opportunity as of now could be in the tens of billions of dollars if we assume hyperscalers in both the US and China are training 5 to 10 foundation models with trillions of parameters, and also 10 to 30 smaller models with tens of billions of parameters, at the same time.

According to Nvidia’s research, it takes more than 3k GPUs to train a Large Language Model with 1 trillion parameters, and each A100 currently sells for 25k USD. Collectively the market as of today would be worth 3 to 5bn USD, and its future growth is expected to stagnate as demand shifts towards the inference and fine-tuning market. That being said, the training market is not where Akash Network is planning to break into.
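As a rough sanity check on these figures, the GPU bill per trillion-parameter model can be sketched in a few lines of Python. The GPU count (3k, treated as a lower bound) and the A100 price (25k USD) come from the text above; the function name and the simplification that every model gets its own dedicated cluster are my own illustrative assumptions. It ignores the smaller models, networking and power, so it is not a reconstruction of the 3 to 5bn estimate.

```python
# Back-of-envelope GPU hardware cost for training trillion-parameter models.
GPUS_PER_TRILLION_PARAM_MODEL = 3_000  # "more than 3k GPUs", used here as a lower bound
A100_PRICE_USD = 25_000                # A100 price quoted in the text


def training_hardware_cost_usd(num_models: int,
                               gpus_per_model: int = GPUS_PER_TRILLION_PARAM_MODEL,
                               price_per_gpu_usd: int = A100_PRICE_USD) -> int:
    """GPU purchase cost, assuming each model is trained on its own cluster."""
    if num_models < 0:
        raise ValueError("num_models must be non-negative")
    return num_models * gpus_per_model * price_per_gpu_usd


if __name__ == "__main__":
    # 5 and 10 are the ends of the "5 to 10 foundation models" range in the text.
    for n in (1, 5, 10):
        cost = training_hardware_cost_usd(n)
        print(f"{n:>2} model(s): {cost / 1e6:,.0f}mn USD")
```

On these inputs a single trillion-parameter model needs at least 75mn USD of A100s, and 5 to 10 such models need 375mn to 750mn USD.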

The fine-tuning and inferencing market, on the other hand, is relatively nascent and even smaller in size as of now. It might even be considered slightly overblown at today's scale, as we have yet to see many daily use cases built on top of AI.

That being said, the category could overtake training as the market matures and shifts towards inferencing or even fine-tuning of trained models. As of now the inferencing market is estimated to be in the single-digit billion dollar range, and it is expected to grow 10x in the next 5 to 7 years.

For upstream GPU players, the revenue opportunity in the inferencing market can be defined as the portion of the cost borne by cloud service providers to offer cloud AI computing services. That is premised on the penetration that the likes of ChatGPT and Bard can achieve, which is still a question mark.
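To put the "10x in 5 to 7 years" expectation in perspective, it can be converted into an implied compound annual growth rate. The sketch below is purely arithmetic; the function name is illustrative and the constant-growth assumption is mine, not something the estimate itself specifies.

```python
def implied_cagr(multiple: float, years: float) -> float:
    """Constant annual growth rate that turns 1 into `multiple` over `years`."""
    if multiple <= 0 or years <= 0:
        raise ValueError("multiple and years must be positive")
    return multiple ** (1.0 / years) - 1.0


if __name__ == "__main__":
    # 10x growth over the 5 and 7 year horizons mentioned in the text.
    for years in (5, 7):
        print(f"10x over {years} years -> {implied_cagr(10, years):.1%} per year")
```

Under that assumption, a 10x expansion corresponds to roughly 58% annual growth over 5 years and roughly 39% over 7 years.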

The Pitch

The value proposition of AkashAI is clear and straightforward: the decentralized network makes high density Nvidia GPUs readily available to users, and it solves deep-rooted problems on both the demand and the supply side.

Access to Nvidia chips is in fact part of the moat. Currently more than 80% of AI specific workloads are processed by Nvidia’s GPUs, simply because Nvidia’s chips are known to be more performant than other existing options.

Nvidia also built its moat by developing ecosystem technology such as NVLink and CUDA. For instance, NVLink is a high-speed interconnect that enables communication between GPUs and CPUs, moving data to and from shared pools of memory at very high speed, which effectively lifts the maximum bandwidth per GPU. CUDA, in turn, is the programming platform through which machine learning libraries such as TensorFlow run on Nvidia GPUs.

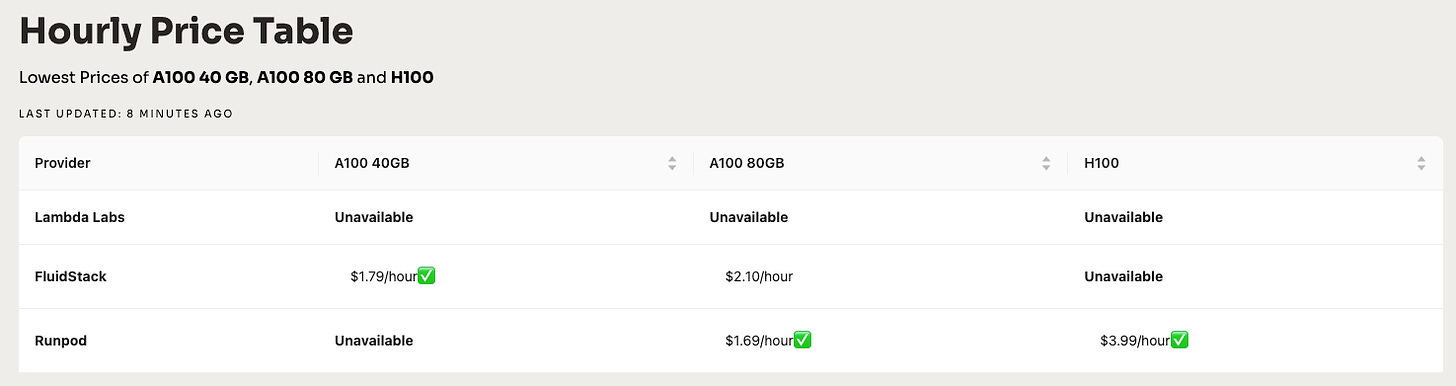

On the demand side, the options for accessing computing power for AI specific workloads are limited. Users can either rent it from hyperscalers like Microsoft Azure or centralized providers such as Lambda Labs or CoreWeave, which can result in availability issues as hyperscalers prefer to work with established AI corporates, or purchase it directly from GPU manufacturers, which can be extremely cost inefficient.

Akash Network effectively solves the problem by bringing high density GPUs to where the models are instead of the other way round, not to mention that it also offers access to the aforementioned Nvidia ecosystem software. What is more, Akash Network could potentially upsell existing, more heterogeneous services such as CPUs, memory and storage, as part of what they call the “Super Cloud”.

Recently, Akash also integrated with Hugging Face to enable multiple AI models to be run on the network, which is open source. This potentially creates more use cases, essentially bringing utility back to the network itself.

On the supply side, Akash Network essentially offers extra cash flow to centralized GPU providers, as users can access the computing power on demand and prepayment is not required.

The GPU supply shortage has continued to worsen due to supply chain limitations and manufacturing bottlenecks, while demand for AI keeps piling up. To make things worse, technology behemoths such as Google and Meta are hoarding high density GPUs to build their own competitive edge.

The sweet spot of the market lies with centralized providers who historically have good relationships with the GPU manufacturers and have managed to receive the highly sought-after inventory. Akash Network is working with the likes of CoreWeave and Lambda Labs to make those chips available to average users by integrating the network into their offerings, bringing a highly fragmented market together on one single decentralized platform.

Valuation

In terms of valuation, I think the fees currently generated by Akash Network are not indicative of the value of the project, not to mention that the team is also pivoting away from the existing cloud CPU business.

As mentioned above, the key driver of the business is the number of high density Nvidia GPUs Akash Network can grant users access to. Hence the angle here should be to value the cash flow each GPU could bring to the network, multiplied by the take rate of the system.

From a unit economics perspective, the rental price for an A100 is 2 USD per hour as of now. Hence the annualized GMV per chip, defined as the value each chip could generate in a year, would be in the 10k USD range once we apply a reasonable utilization rate (2 USD × 8,760 hours is about 17.5k USD at full utilization).

Assuming Akash Network grants access to 10k Nvidia A100 chips, that would translate into an annualized gross merchandise value (GMV) of about 175mn USD at full utilization (2 USD × 8,760 hours × 10k chips). Akash Network currently takes a 20% platform take rate on top of the GMV, which would bring top line revenue to the 35mn range.
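The unit economics above reduce to a short calculation, sketched here in Python so the inputs can be varied. The 2 USD hourly rate, 10k chips and 20% take rate come from the text; the function names and the 0.6 utilization scenario are hypothetical choices of mine for illustration.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours


def annual_gmv_usd(num_gpus: int,
                   hourly_rate_usd: float = 2.0,
                   utilization: float = 1.0) -> float:
    """Annualized rental value of a GPU fleet at a given utilization (0 to 1)."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    return num_gpus * hourly_rate_usd * HOURS_PER_YEAR * utilization


def network_revenue_usd(gmv_usd: float, take_rate: float = 0.20) -> float:
    """Top line revenue the network keeps from the GMV."""
    return gmv_usd * take_rate


if __name__ == "__main__":
    # 1.0 is full utilization; 0.6 is a hypothetical, more conservative scenario.
    for utilization in (1.0, 0.6):
        gmv = annual_gmv_usd(10_000, utilization=utilization)
        revenue = network_revenue_usd(gmv)
        print(f"utilization {utilization:.0%}: "
              f"GMV {gmv / 1e6:,.1f}mn USD, revenue {revenue / 1e6:,.1f}mn USD")
```

At full utilization this gives about 175mn USD of GMV and about 35mn USD of revenue; any lower utilization scales both figures down proportionally.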

From there we can apply a P/F (price-to-fees) ratio, benchmarked against other DePIN projects in the market, which trade anywhere from 300x to 700x, to derive the upside potential of the project. Following this line of logic, the upside from the current market cap could suggest an outsized return.

It is also important to factor in a potential reduction in the take rate, as well as variables such as whether Akash Network manages to gain access to the most wanted chips when newer versions emerge, and when the supply constraints are resolved. As of now, the above is the pitch and what got the market excited in the first place.

Older generation GPUs will still see market demand as the technology advances, since they remain suitable for specific use cases. That should be reflected in an adjusted hourly rental price to keep the calculation relevant in the long run.
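The multiple-based step can be sketched the same way. The 300x to 700x range and the 35mn revenue figure come from the text; the function names are illustrative, and the current market cap is deliberately left as an input the reader has to supply, since no figure is given here.

```python
def implied_valuation_range_usd(annual_fees_usd: float,
                                low_multiple: float = 300.0,
                                high_multiple: float = 700.0) -> tuple[float, float]:
    """Valuation range implied by applying a P/F multiple band to annual fees."""
    if low_multiple > high_multiple:
        raise ValueError("low_multiple must not exceed high_multiple")
    return annual_fees_usd * low_multiple, annual_fees_usd * high_multiple


def upside_multiple(implied_valuation_usd: float, current_market_cap_usd: float) -> float:
    """How many times the current market cap the implied valuation represents."""
    if current_market_cap_usd <= 0:
        raise ValueError("current_market_cap_usd must be positive")
    return implied_valuation_usd / current_market_cap_usd


if __name__ == "__main__":
    low, high = implied_valuation_range_usd(35_000_000)  # 35mn revenue from the text
    print(f"Implied valuation: {low / 1e9:.1f}bn to {high / 1e9:.1f}bn USD")
```

On the 35mn revenue estimate, the band works out to 10.5bn to 24.5bn USD. The result is only as good as the multiple, which is why a lower take rate or lower comparable multiples would change the picture materially.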

Conclusion

As the market is focused on the Bitcoin ETF narrative at the moment, multiple catalysts need to be in place to bring the focus back to Artificial Intelligence coins. The catalysts I am paying attention to are the Tesla AI Day, scheduled to happen by early next year, and the potential launch of GPT-5.

That being said, the fundamentals of the project have not changed compared to a few months ago when the market was euphoric, and this should be the best time to position ourselves accordingly.
